Assessment: Is your enterprise ready for AI customer service?

Before choosing the right AI customer service solution for enterprise scale, use this assessment to make sure your organization is set up for long-term success.

Learn More

AI is evolving fast. And when you’re building the infrastructure behind it, your engineering standards need to move just as quickly.

At Ada, we were facing a common challenge: thousands of lines of legacy code needed refactoring, with virtually no test coverage to make that refactoring safe.

This legacy code was holding back our development velocity. We knew that modernizing it would significantly improve our codebase, and AI tools could handle much of the conversion work effectively. But there was one major roadblock: how do you safely refactor code when there’s no way to verify that your changes won't introduce regressions?

The best practice is to write comprehensive tests first, but when you're staring down thousands of lines of code, that could mean weeks of work before any real progress begins. It's a frustrating cycle: the testing barrier makes refactoring feel too risky, so technical debt continues to build.

That's when I considered a different approach: what if AI could help us build the safety harness we needed?

I started by testing several tools: DevinAI, GitHub Copilot, and ChatGPT. But Claude Code , Anthropic’s command-line AI assistant for coding, stood out. Its control and iterative refinement features made it the best fit for this challenge.

My hypothesis was simple: if Claude Code could generate usable tests quickly, we could safely refactor legacy code at scale. In our case, that meant converting legacy Redux code from reducer/action patterns to modern Redux Toolkit slices with full test coverage.

If successful, this approach could transform how we handle large refactoring projects, turning weeks of manual test writing into a much more manageable process.

To set a baseline, I gave Claude Code a small React component with minimal instruction—no context about our testing patterns or conventions. The results highlighted the challenge ahead.

While Claude Code demonstrated a solid understanding of what functionality needed testing and wrote reasonable test descriptions, the generated tests were completely unusable:

Fixing the tests would’ve taken longer than writing them from scratch. But instead of quitting, I treated the output as valuable data on what to do next.

I approached the problem like any other software issue: break it down, learn from failure, and iterate.

I created a CLAUDE.md instruction file with specific instructions about our testing patterns and philosophy. After each failed attempt, I would:

This process became quite educational. Not just for Claude Code, but for myself and the team, too. I had to articulate testing practices that I'd internalized over years of experience, making our team's implicit knowledge explicit.

One of the most persistent issues was Claude Code's tendency to mock extensively. Here's an early instruction I added:

But the mocking continued. I learned that AI tools like Claude Code work much better when you give them positive instructions about what they should do in specific scenarios, rather than just telling them what they shouldn't do.

But positive instructions alone still weren't enough. While I could tell Claude to "use MSW handlers and real store configurations," it still struggled without concrete examples of which specific helpers to use or how to structure the test setup.

The turning point came when I realized I needed to be extremely specific about our project's tooling and conventions, down to exact file paths and code snippets. Here's how the mocking instructions evolved:

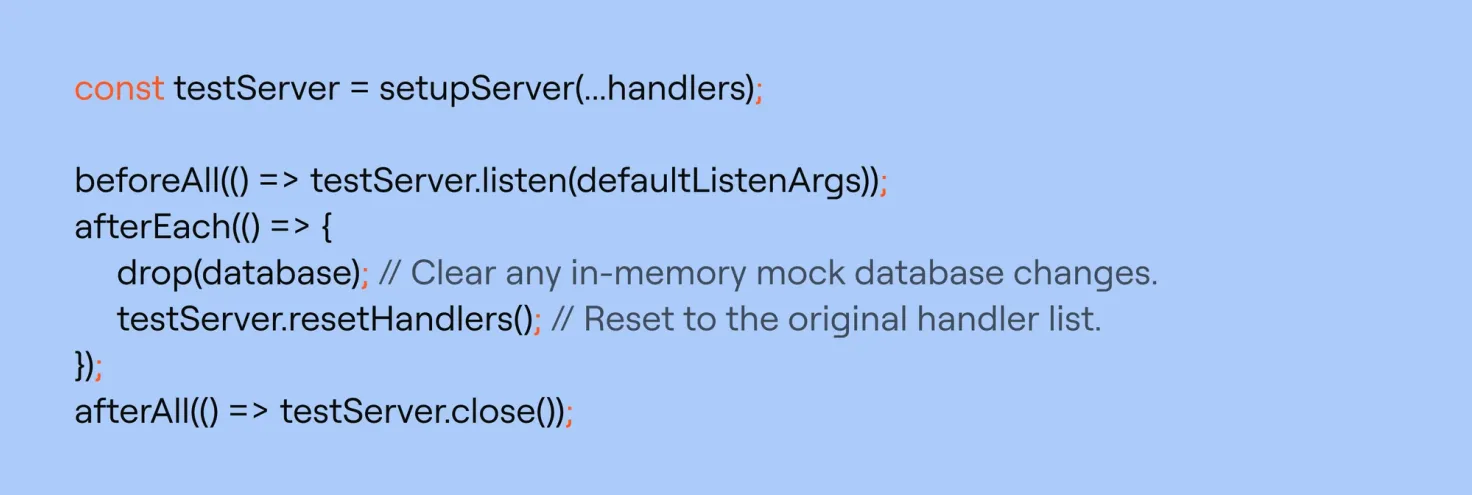

Organize handlers in a central mocks directory:

Use this recommended MSW setup snippet:

It wasn’t enough to say “use MSW." Claude needed the file structure, boilerplate, and conventions. This taught me that successful AI collaboration depends on making implicit team knowledge explicit.

The instruction file grew significantly over time, but each addition addressed a failure and made the tool stronger

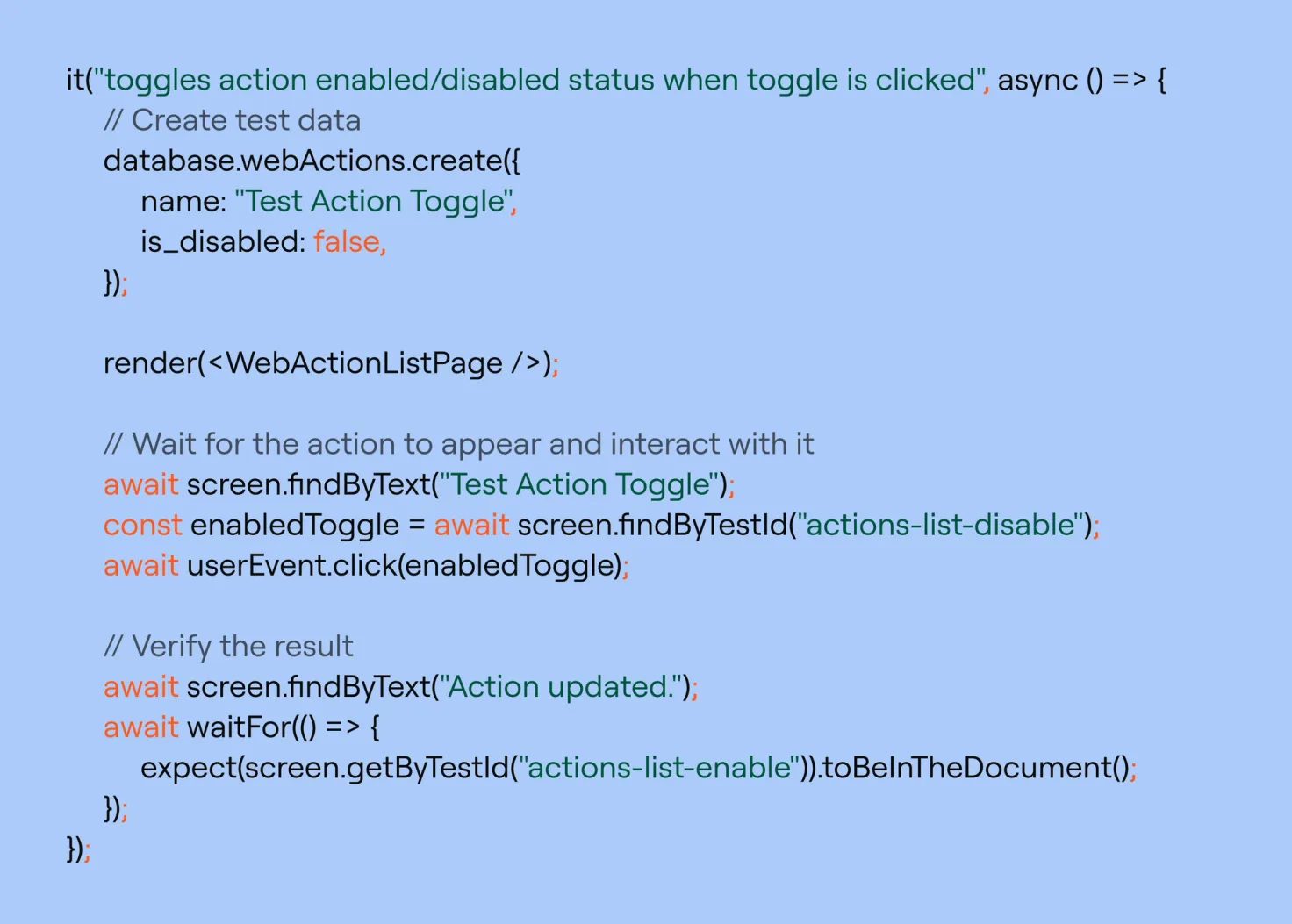

After several iterations, I tested Claude Code on a similar component.

The transformation was remarkable. What used to take 2-3 hours now took 15-20 minutes.

The generated tests were functional, achieved over 80% code coverage, and followed all of our conventions.

The tests used our render helpers, handled async operations correctly, focused on user behavior—not implementation details—and were robust against UI changes.

Claude Code even resolved ESLint and TypeScript issues and verified that tests passed before moving on.

This wasn’t just a better output—it was a reusable system. And I realized the method could extend far beyond our Redux migration.

The biggest benefit wasn’t speed, it was scale.

Claude Code became a teaching tool. Engineers with less testing experience could now write high-quality tests and learn our best practices in the process.

Instead of asking senior developers to explain MSW setups or querying strategies one-on-one, we encoded that knowledge in a way Claude—and our team—could apply repeatedly.

With Claude, we’re not just writing tests faster, we’re raising the floor on test quality across the team.

It didn’t stop there. Since implementing this approach, I've found Claude Code invaluable for much more than just writing new tests. It's become my go-to tool for:

It’s not just a code generator—it’s an extension of our engineering brain. The investment we made in teaching it our way of working continues to pay off in time saved, quality gained, and knowledge shared.

If you want to try this approach with your own team, here are the key steps to get started:

The most powerful AI applications aren't about replacing human developers, they're about amplifying our capabilities and spreading expertise across the team.

By investing time upfront in teaching Claude Code our testing standards, we found a tool that helps experienced developers move faster and helps less experienced developers learn better practices.

When the cost of building a safety net drops from weeks to days, entire categories of tech debt become tackleable. Risky refactors become routine. And your AI tools stop being novelties—they start becoming teammates.

Claude helped us break through our testing barrier. Not because it was brilliant out of the box, but because we taught it how to be useful.

That’s the real promise of AI in software engineering—not to replace humans, but to multiply our impact.

Join Ada at the forefront of AI customer service, as we build truly disruptive technology for some of the biggest brands in the world.

See careers