We've witnessed remarkable breakthroughs in artificial intelligence, many of which have revolutionized industries across the board. From healthcare to finance to customer service, AI has become an indispensable tool for businesses looking to streamline operations and enhance experiences.

However, as with any powerful technology, AI comes with its own set of challenges. One of the most perplexing is the phenomenon of AI hallucinations.

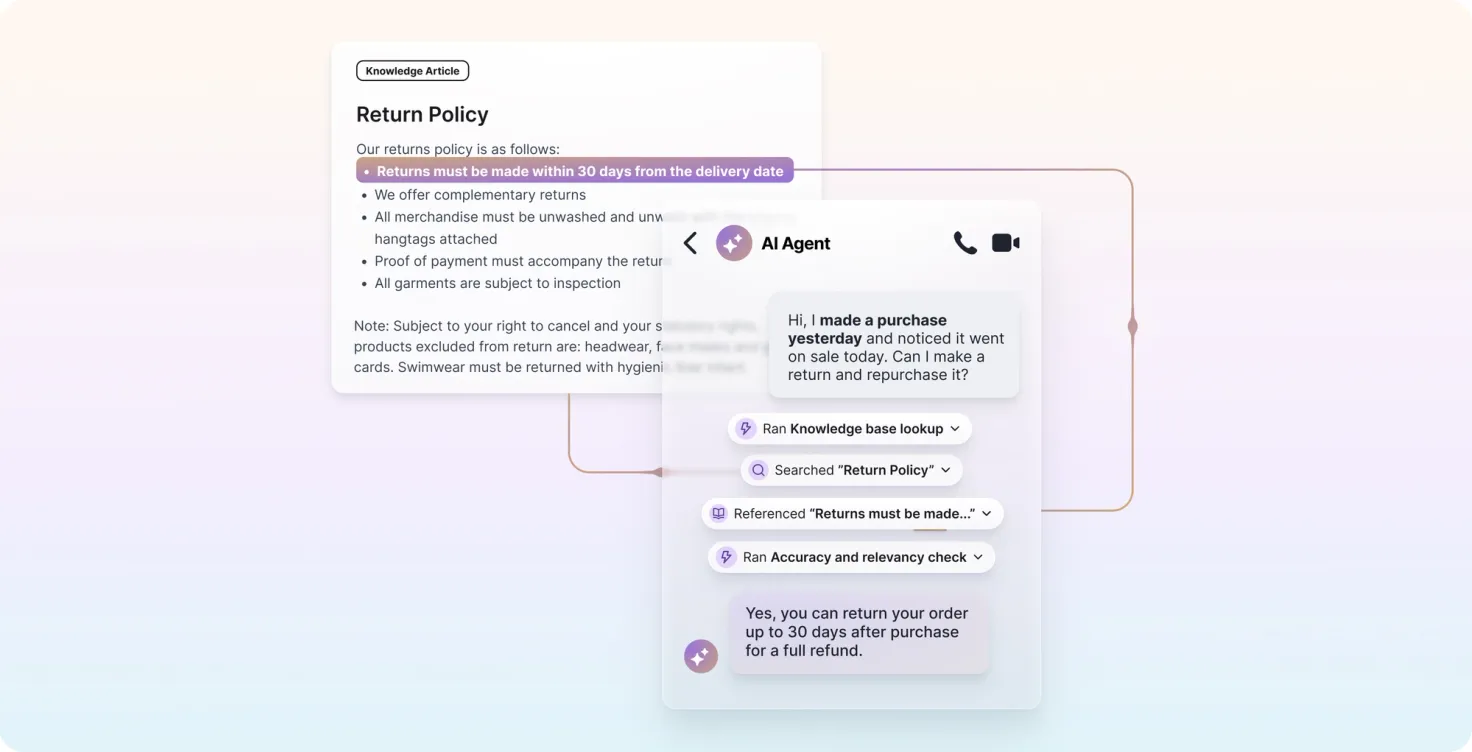

It could go a little something like this: You're chatting with an AI agent, asking about a company's return policy. Suddenly, the AI confidently informs you that you can return any item within 90 days, no questions asked. Sounds great, right? There's just one tiny problem – the actual return policy is only 30 days. You've just experienced an AI hallucination.

There’s no magic solution for eliminating hallucinations, but there are ways to reduce them in your own AI agents. One of the most notable hallucination-prevention techniques is called “grounding.” Let’s explore what grounding is and how it helps keep AI hallucinations in check.

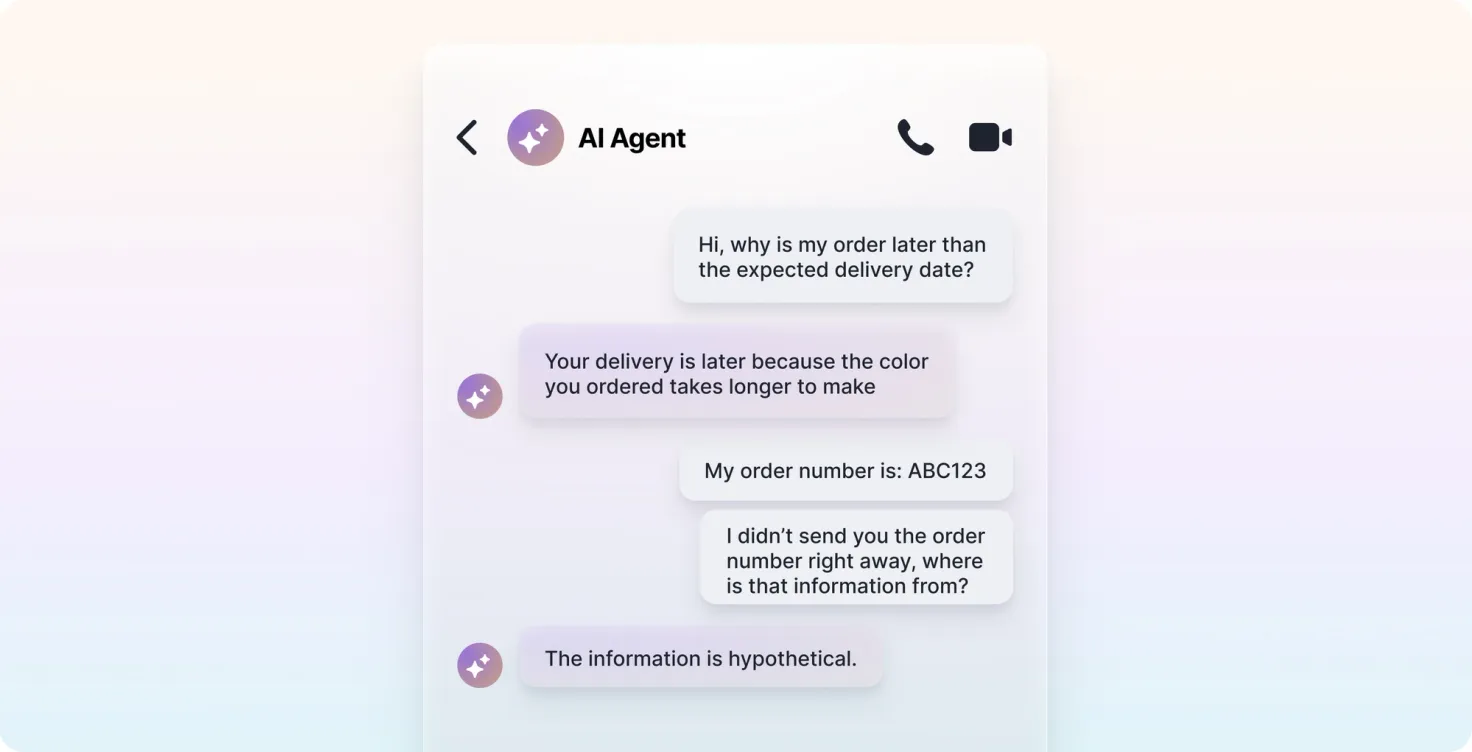

Let's start with the basics: What are AI hallucinations? Simply put, hallucinations occur when an AI model generates false or misleading information and presents it as fact.

It's like that one friend who always embellishes their stories at parties – except this friend is a highly sophisticated language model with access to vast amounts of information.

Hallucinations are more common than you might think. According to recent studies, AI hallucinations can occur in anywhere from 3% to 10% of responses generated by large language models. Some estimates even suggest that chatbots may hallucinate up to 27% of the time.

So why do these hallucinations happen? It comes down to how the AI models are trained.

AI models learn by analyzing massive amounts of data and identifying patterns. Sometimes, in their eagerness to provide a coherent response, they may fill in gaps with information that seems plausible but isn't necessarily true. It's like playing a high-stakes game of Mad Libs, where the AI is desperately trying to complete the sentence.

Now that we've established the problem, let's talk more about one of the solutions: grounding. In the world of AI, grounding is like giving your model a solid foundation in reality. It's the process of connecting AI outputs to verifiable sources of information, making it more likely that the model's responses are anchored in fact rather than fiction.

Think of grounding as putting a leash on your AI agent’s imagination. It's not about stifling creativity — it's about channeling that creativity in a way that's actually useful and accurate. After all, we want our AI assistants to be more like helpful librarians and less like unreliable narrators.
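To make the idea concrete, here is a minimal sketch of a grounding check, using the return-policy scenario from earlier. It is a toy, not a production method: `POLICY_TEXT`, `day_counts`, and `is_grounded` are hypothetical names invented for illustration, and the check only compares day counts between an answer and a source you trust.

```python
import re

# Assumed source of truth; in practice this would come from your verified policy docs.
POLICY_TEXT = "Items can be returned within 30 days of purchase."

def day_counts(text):
    """Collect every 'N days' figure mentioned in the text."""
    return set(re.findall(r"(\d+)\s+days", text.lower()))

def is_grounded(answer, source):
    """True when every day count stated in the answer also appears in the source.

    Note: an answer that states no day count at all passes this narrow check.
    """
    return day_counts(answer) <= day_counts(source)

hallucinated = "You can return any item within 90 days, no questions asked."
accurate = "You can return items within 30 days."

print(is_grounded(hallucinated, POLICY_TEXT))
print(is_grounded(accurate, POLICY_TEXT))
```

The 90-day answer fails the check because no source backs it, while the 30-day answer passes. Real systems compare far more than numbers, but the principle is the same: a claim counts only if a verifiable source supports it.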

Let's explore some of the most effective grounding techniques that are helping to tame the wild imaginations of AI models.

Retrieval-augmented generation (RAG) is like an AI model’s personal research assistant. Instead of relying solely on its pre-trained knowledge, RAG allows the model to pull information from external, verified sources in real time.

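Here's how it works: the system retrieves passages relevant to the user's question from a trusted knowledge source, adds them to the prompt, and has the model generate its answer from that retrieved context.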

The beauty of RAG is that it significantly reduces the chance of hallucination by grounding the AI's responses in up-to-date, accurate information. It's like having a fact-checker on speed dial.

When an AI agent uses RAG, it’s able to cross-verify information across multiple reliable sources and use various verification mechanisms to ensure the accuracy of its responses.
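The retrieval side of RAG can be sketched in a few lines. This is a simplified illustration under stated assumptions, not how any particular product implements it: `KNOWLEDGE_BASE`, `retrieve`, and `build_grounded_prompt` are hypothetical names, retrieval here is plain word overlap (real systems typically use search indexes or embeddings), and the final call to a language model is left out.

```python
import re

# Hypothetical knowledge base; in practice this would be your verified help-center content.
KNOWLEDGE_BASE = [
    "Return policy: items can be returned within 30 days of delivery.",
    "Shipping: standard orders arrive in 5 to 7 business days.",
    "Warranty: electronics include a one-year limited warranty.",
]

def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(question, documents, top_k=1):
    """Return up to top_k documents that share the most words with the question."""
    question_words = tokenize(question)
    scored = [(len(question_words & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]

def build_grounded_prompt(question, passages):
    """Place the retrieved passages in the prompt and tell the model to stay within them."""
    context = "\n".join(f"- {p}" for p in passages) or "- (no relevant source found)"
    return (
        "Answer using only the sources below. "
        "If the sources do not contain the answer, say you don't know.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}"
    )

question = "What is your return policy?"
passages = retrieve(question, KNOWLEDGE_BASE)
prompt = build_grounded_prompt(question, passages)
print(prompt)
```

The resulting prompt carries the 30-day policy text alongside the customer's question, so the model has the verified answer in front of it instead of having to guess.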

In the world of AI, how you ask a question can make a huge difference in the output. Prompt engineering is the practice of optimizing a prompt (input instructions and context) to achieve a desired outcome. Ideally, good prompts produce accurate and relevant outputs. When giving instructions to your AI agent, be as mindful of how you craft your directions as you would be when giving an employee feedback: the instructions need to be constructive and provide a clear direction forward.

Here are some key strategies in prompt engineering your AI agent:

By optimizing our prompts, we can help steer the AI away from hallucinations and towards factual responses.
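As a rough illustration of the difference a prompt can make, the sketch below contrasts a vague instruction with a specific one. The practices it shows (a defined role, a limited scope, a requirement to rely on sources, and an explicit fallback) are common prompt-engineering habits chosen for this example. `build_system_prompt`, "Example Store", and the wording of each instruction are assumptions made for illustration.

```python
def build_system_prompt(company, allowed_topics, fallback):
    """Assemble explicit instructions: role, scope, source requirement, and fallback."""
    topics = ", ".join(allowed_topics)
    return "\n".join([
        f"You are a customer service agent for {company}.",
        f"Only answer questions about: {topics}.",
        "Base every answer on the provided sources; do not guess.",
        f"If you are unsure, reply exactly: {fallback}",
    ])

# A vague prompt leaves the model to fill in the gaps on its own.
vague_prompt = "Help the customer."

# A specific prompt states what to do, what to avoid, and what to say when unsure.
specific_prompt = build_system_prompt(
    company="Example Store",
    allowed_topics=["returns", "shipping", "warranty"],
    fallback="I'm not sure. Let me connect you with a human agent.",
)
print(specific_prompt)
```

Giving the agent an approved way to say "I'm not sure" matters as much as the other instructions, since it removes the pressure to produce a plausible-sounding guess.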

As we continue to refine these grounding techniques, we're moving towards a future where AI can be a more reliable and trustworthy partner in our daily lives and business operations. In fact, despite concerns about AI usage, 65% of consumers still trust businesses that employ AI technology.

But let's not get ahead of ourselves. While we're making great strides in taming AI hallucinations, it's important to remember that no system is perfect.

AI hallucinations are a real challenge, but with clever grounding techniques, we can keep our artificial friends firmly rooted in reality. It's an ongoing process of refinement and improvement, but the potential benefits are enormous.
