Assessment: Is your enterprise ready for AI customer service?

Before choosing the right AI customer service solution for enterprise scale, use this assessment to make sure your organization is set up for long-term success.

Learn More

Generative AI is a game-changer for customer service. It solves the most pressing customer service problems — scalability, speed, and accuracy. But like every technology out there, generative AI requires its users to manage its downsides — in this case, hallucinations.

Fact is, all large language models (LLMs) are prone to hallucinations. 86% of online users have experienced them.

But hallucinations can be embarrassing for companies, and in extreme cases, even lead to losses. Take Chevrolet for example — the company’s AI-powered chatbot accepted an offer for $1 for the 2024 Chevrolet Tahoe, which has a starting MSRP of $58,195.

So, should you wait until AI evolves to use it for customer service? No, and here’s why: AI is the dominant force in the current customer service landscape. You need AI to deliver on a modern customer’s expectations. While the technology matures, hedge for risks instead of avoiding the technology altogether.

AI hallucinations are incorrect or misleading outputs generated by AI models. Hallucinations can happen for a variety of reasons, including training data and training methods, which can lead models to contain biases or the willingness to always give a confident answer. It’s not just about what data is fed into an LLM, it’s also about how the model is trained.

Here’s how generative models like GPT-4 work: The LLMs used in generative AI tools are trained on large datasets from multiple sources, including ebooks, personal blogs, Reddit, and social media posts. They use this data to produce information that’s not always true but seems plausible.

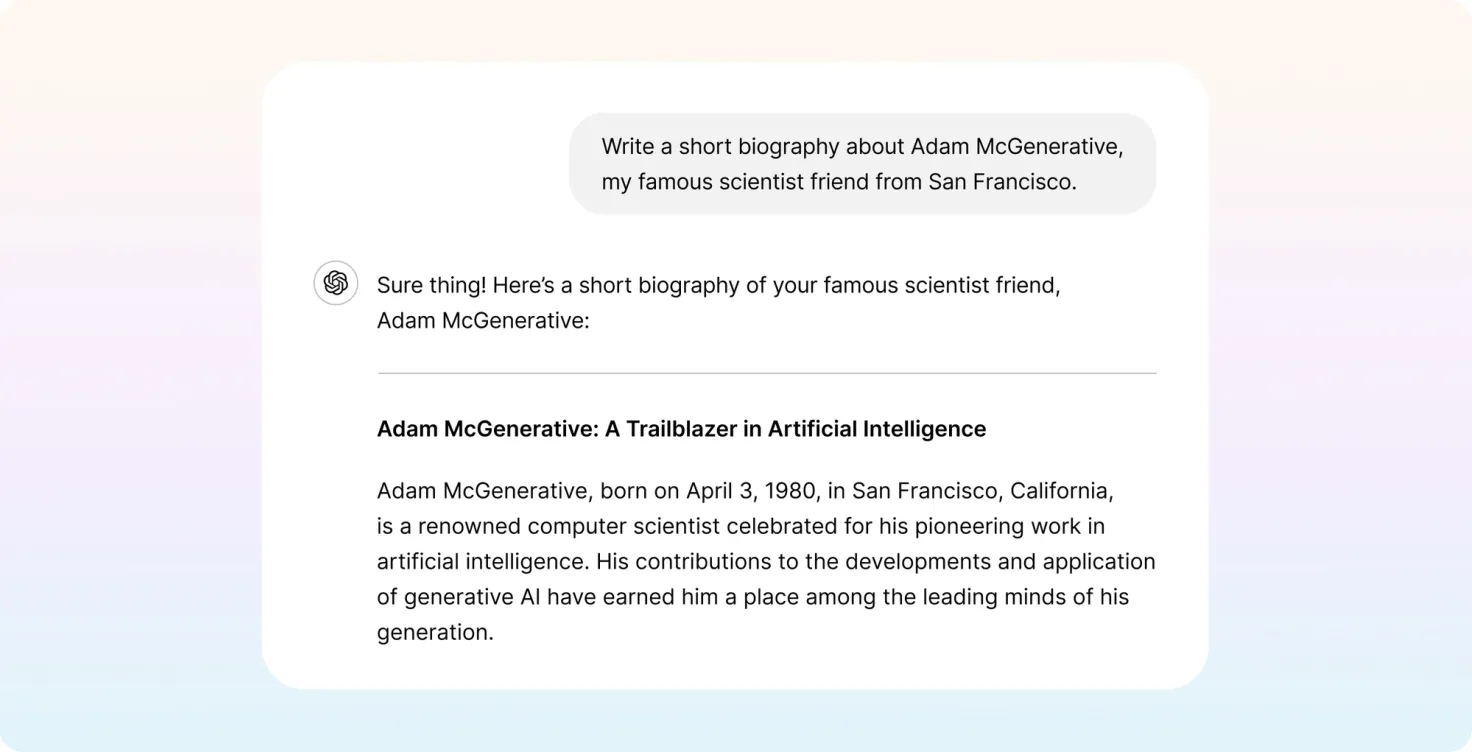

For example, if I ask GPT-4 to write a short biography about a non-existent friend, it strings together information in a way that seems deceptively accurate but is imaginary. While this is a feature if you’re looking for creative answers, it’s a bug when you see it from a customer support perspective:

Essentially, GPT-4 predicts the next word in a sentence based on patterns in the training data. Sometimes, the pattern matching goes haywire and leads to coherent but factually incorrect or irrelevant responses. Other reasons for hallucinations include AI’s inability to understand the world like humans, lacking a sense of reality and common sense, and the tendency to prioritize creative novel outputs.

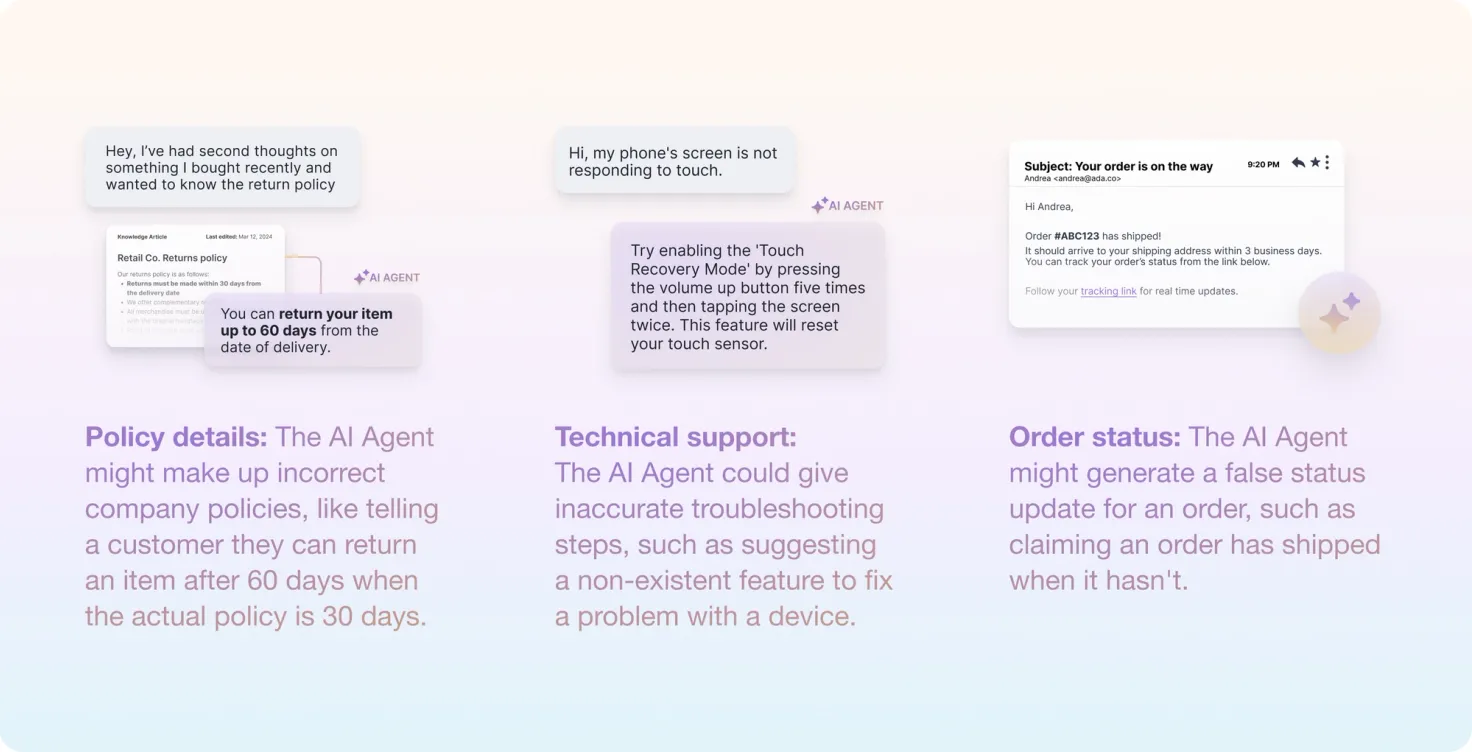

Incorrect information about Adam McGenerative isn’t too harmful. But if you use AI for customer service, hallucinations could spell trouble for business. If the AI doesn’t get its facts straight before it opens its digital mouth, your customers might lose trust in your brand and churn. Remember that hallucinations are not always AI’s fault — inconsistencies in your knowledge base could also be a reason why AI delivers incorrect information to customers.

Fortunately, there are ways to ground an AI tool’s responses in reality. The best way to do this is to train your AI agent to avoid unvalidated public sources like Reddit and social media posts when stating facts. Double-down on training the LLM to pull information from reliable sources, such as your knowledge base, internal documentation, and previously resolved customer interactions.

Suppose you offer an AI proposal generator. A customer asks your AI agent if signed e-documents are legally binding. Here, you want your AI agent to pull information from your knowledge base, especially if your clientele primarily belongs to a specific industry. Pulling information from unreliable or outdated sources can potentially put your client and, by the same token, your reputation at risk.

Generative AI tools hallucinate anywhere between 2.5 to 22.4% of the time, according to Vectara . These hallucinations can harm your relationships with customers, spread misinformation, and require customers to invest extra effort just to get information. Here are some common types of hallucinations :

AI can generate factually incorrect information that sounds completely plausible. If your customers ask your AI agent about the potential benefits of a cleaning product available on your ecommerce store, it might tell them, “Our robot cleaner helps you automatically dust and mop tiles, carpets, and ceilings in your house.” Unless your robot cleaner is Spiderman, claiming that your product can clean the ceiling is factually incorrect.

Remember when Google Bard (now Google Gemini) was asked about the Webb Space Telescope’s discoveries and Bard incorrectly stated that the telescope took pictures of an exoplanet? Even ChatGPT has made up some bizarre statements — for example, it falsely claimed that an Australian politician (who was in fact the whistleblower) was guilty of bribery.

Unfortunately, there’s no crystal ball you can peep into to identify fabricated facts. Your customers will need to manually verify facts and invest time in research.

Are there steps you can take to deploy a more trustworthy and reliable AI agent? We address the issue of AI hallucinations from all angles in this webinar with AI experts, including OpenAI's Ankur Rastogi.

Watch now

AI may generate grammatically incorrect sentences or responses that don’t logically follow the conversation’s context. When a customer asks how your product’s email automation feature works, if not properly trained, managed, and maintained, AI could respond with something like, “Our tool uses chocolate chips to set up trigger-based email automation workflows.”

Likely, the response won’t be this blatantly incoherent. To identify incoherent responses, look for logical inconsistencies, awkward sentence structures, nonsensical statements, and irrelevant information.

AI may lose the context of the conversation and generate irrelevant information. For example, if the customer asks the AI about how your tool knows if someone opened an email, it may respond, “The email automation tool uses a pixel inserted in emails to track if someone opened it. Gmail also launched a great AI writing feature last year to help write emails faster.” The transition from email opens to Gmail’s new AI feature is abrupt and irrelevant to the original question.

If you’ve noticed the AI tool throwing abrupt or irrelevant information or discontinuity from previous interactions when responding to customers, that’s a sign of context dropping. These signs may be subtle at times so there’s no guarantee you’ll catch the context switch.

AI may attribute discoveries, quotes, or events to the wrong person. So if someone were to ask who coined the idea of AI, the AI might say, “The idea of AI was coined by Neil Armstrong.” It wasn’t. It was coined by John McCarthy. The only way your customers can identify misattribution is to manually verify the information from a credible source.

This is when AI generates broad responses that lack detail and precision. When your customer asks the AI agent to explain the process of setting up the email automation tool , the AI agent’s response might be, “Sign into your account, compose a new email, and send it to the intended recipients.”

While that’s technically true, it lacks details about how to create an account and ways to integrate the tool into your existing email tool.

Overgeneralizations are generally easy to identify. If you feel the generated response is too vague or short, the AI might be overgeneralizing. A simple Google search will help you verify this.

Temporal inconsistencies occur when AI mixes up timelines when generating responses. Suppose a customer asks the AI, “On what date was my first invoice issued?” The AI agent might respond, “Your first invoice was issued on April 25, 2005.” Now, this could be the date the company issued its first invoice instead of that customer’s first-ever invoice from your company.

In some cases, temporal inconsistencies are easy to spot. If the AI tells the customer their first invoice was issued in the 1980s, the inconsistency is pretty loud and clear. If the temporal inconsistency isn’t obvious, the customer will need to verify the dates with a Google search.

Accidental prompt injection refers to the unintended introduction of instructions to a prompt that leads to a change in the LLM’s response or behavior. Unsanitized user input and overlapping context are common causes of accidental prompt injection.

Suppose the user types the following message:

<script>alert('This is a test');</script>

While the intended system prompt might be to “Describe how to fix the broken link,” the LLM might receive a different instruction. For example, it could be instructed to process the script, which can lead to inappropriate or insecure outputs.

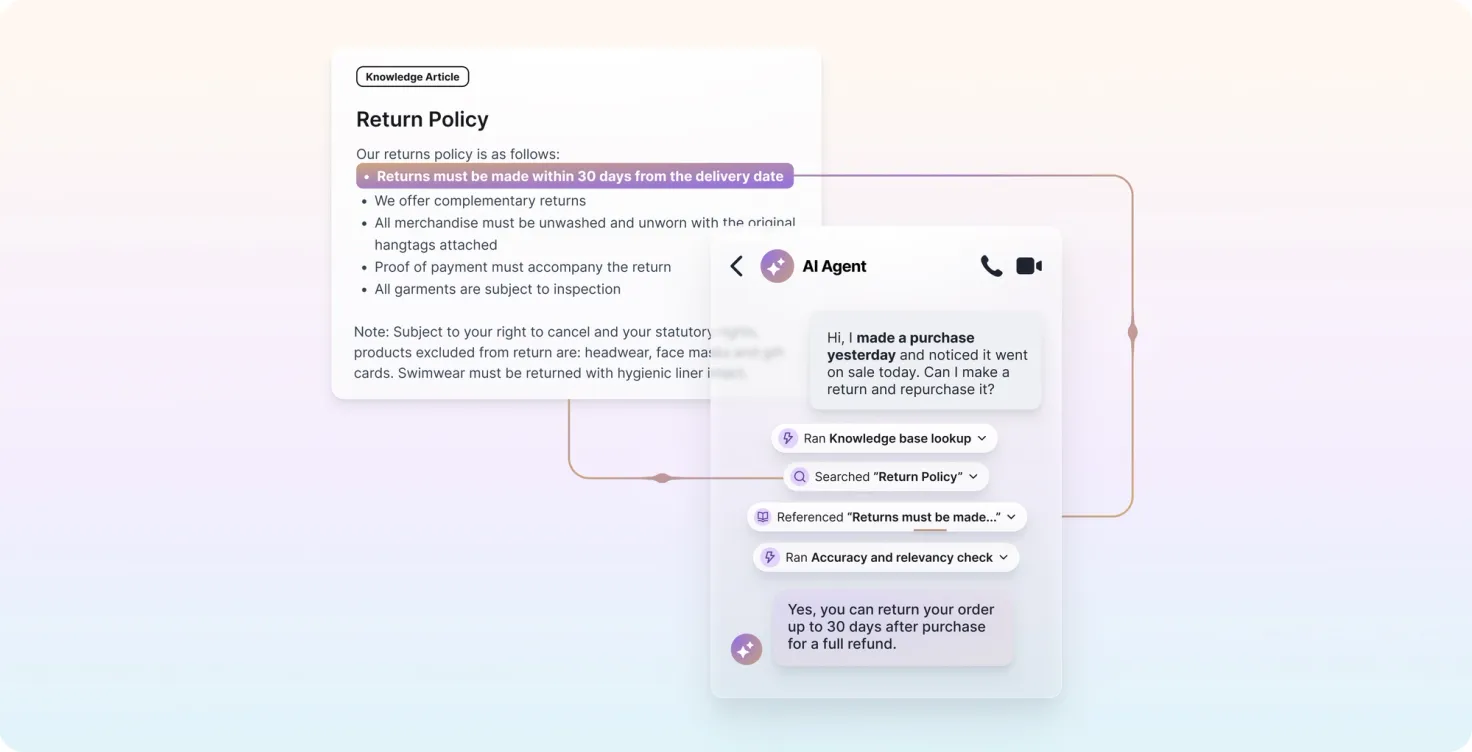

Grounding is a hallucination prevention technique that shows the LLM the right context by retrieving the most relevant information, and in turn, helps the LLM generate more accurate responses. If you’re your company’s AI manager, you can use these techniques to prevent AI hallucinations we discussed in the previous section.

There are various grounding techniques that help prevent hallucinations. Here are some commonly used techniques:

RAG is a two-headed beast — it uses a retriever and a generator to create great responses based on fact-checked information. Here’s how it works:

Think of knowledge graphs as a giant, organized mind map that connects pieces of information. It’s a network of nodes and edges — nodes are entities or concepts, like people, places, things, or ideas, and edges are the relationships between these nodes.

For example, “Adam Jones” (node), a bank’s customer, “is” (edge) a “High Net-Worth Individual” (node). Each node can have multiple properties, such as birthdate and nationality.

Then there’s the ontology or structure of the knowledge graph. The structure defines the types of nodes and edges and the possible relationships between them. Think of it as a rulebook that ensures each data point on your knowledge graph makes sense and is logically organized.

LLM prompting is a user-side grounding technique. It involves creating more specific prompts to guide, or straight-up shove, the AI agent towards correct and relevant information.

Suppose you want to ask an AI agent, “Could you share a copy of my latest invoice?” The agent might fetch the wrong invoice or the wrong customer’s invoice, but if you ask, “Could you share a copy of my last invoice? The invoice number is #1234 and my customer ID is #6789,” the AI agent is more likely to deliver a correct answer.

Here’s how users can create a prompt to ensure factually correct, relevant responses:

Researchers haven’t found a way to completely eliminate hallucinations. But computer scientists at Oxford University have made great progress.

A recent study published in the peer-reviewed scientific journal describes a new method to detect if an AI tool may be hallucinating. The method can tell if AI-generated answers are correct or incorrect roughly 79% of the time, higher than other methods by 10%. This research opens doors to deploying language models in industries where accurate and reliable information is non-negotiable, such as medicine and law.

The study was focused on a specific type of AI hallucination, where the model generates different answers to the same question but with identical wording (called confabulations). The research team developed a statistical method that estimates uncertainty based on the amount of variation between responses (measured as entropy). The method aims to identify when LLMs are uncertain about a response's meaning instead of just the phrasing of a response.

As the author of the study, Dr. Sebastian Farquhar, explains :

“With previous approaches, it wasn’t possible to tell the difference between a model being uncertain about what to say versus being uncertain about how to say it. But our new method overcomes this.”

However, this method doesn’t catch “consistent mistakes,” or mistakes that lack semantic uncertainty — these are responses where the AI is confident about an incorrect response.

While there’s no way to eliminate hallucinations, following best practices can help you manage them more effectively. Here are four best practices to follow:

When training an AI agent, take care of the following:

Taking proactive steps to prevent hallucinations can greatly impact the quality of responses. Here are two ways to do that:

Educate everyone on the customer service team about AI hallucinations. Help them understand technical details about what causes them and the best ways to tackle them.

Start by creating a clear and concise document that covers common issues like ways to spot hallucinations and steps for corrections. If possible, show them past instances when the AI hallucinations resulted in problems with customers.

Consider interactive simulations to help the team practice situations where the AI hallucinates. If possible, bring in an expert occasionally to discuss new methods to tackle hallucinations and equip the customer service team with the most efficient techniques.

Be ethical when using an AI agent by:

AI hallucinations can jeopardize your reputation and relationships with customers. If you play your cards right, an AI agent can transform your customer service processes and save you plenty of money. But it’s important to hedge — AI can produce incorrect information and amplify biases.

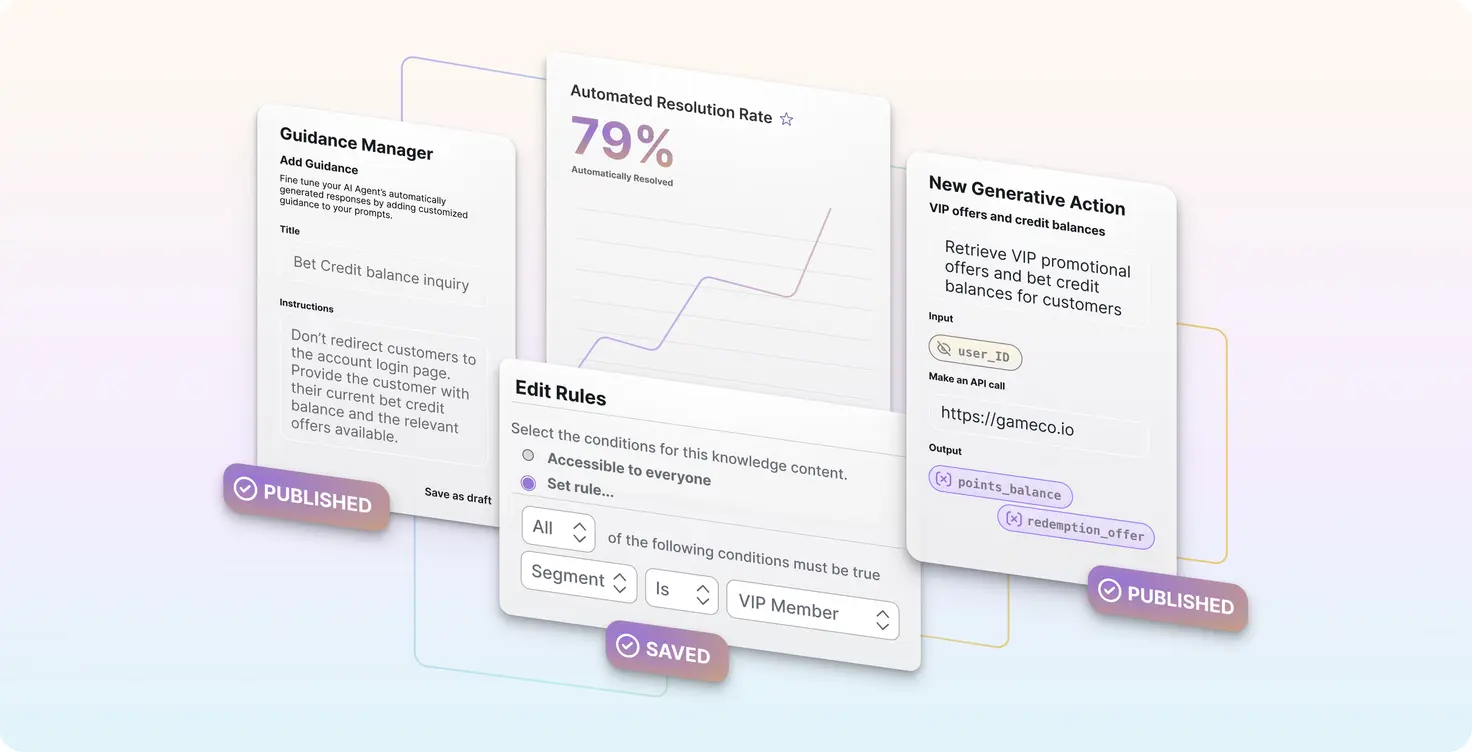

Ada’s AI Agent offers strong protection against hallucinations. It has a built-in Reasoning Engine that understands the context of the conversation and searches the knowledge base or pulls information from other tools with API calls to produce accurate information. Assuming all the information in the knowledge base and other software is accurate, there’s little chance of hallucinations.

At Ada, we aim to automate all customer interactions and minimize AI-related risks with our industry-leading AI Agent. Try Ada’s AI Agent to see how it can transform your customer service.

Go deeper. Discover more tips for prevention and get actionable insight on how to quickly identify and correct them.

Get the guide